Email Scraper Software

Content

Here we are going to perform web scraping by using selenium and its Python bindings. You can study more about Selenium with Java on the hyperlink Selenium. A very essential component of net scraper, net crawler module, is used to navigate the goal website by making HTTP or HTTPS request to the URLs. The crawler downloads the unstructured knowledge (HTML contents) and passes it to extractor, the next module. The answer to the second query is a bit difficult, because there are many methods to get data. When that happens, the program can just print an error message and move on with out downloading the picture. Next is parsing the data and extracting all anchor links from the web page. As we iterate by way of the anchors, we have to retailer the outcomes into an inventory. Google.com house page.Ever since Google Web Search API deprecation in 2011, I’ve been searching for an alternate. I need a method to get links from Google search into my Python script. So I made my own, and here is a quick guide on scraping Google searches with requests and Beautiful Soup.

Python Loops – While, For And Nested Loops In Python Programming

But there could be occasions when you need to collect information from a website that doesn't present a selected API. This is where having the ability to carry out internet scraping is useful. As a data scientist, you possibly can code a simple Python script and extract the information you’re looking for. The best way to take away html tags is to make use of Beautiful Soup, and it takes just one line of code to do this. Pass the string of curiosity into BeautifulSoup() and use the get_text() method to extract the text without html tags. First of all, to get the HTML source code of the online web page, ship an HTTP request to the URL of that internet page one desires to access. The server responds to the request by returning the HTML content material of the webpage. For doing this task, one will use a third-get together HTTP library known as requests in python. For instance, requests-html is a project created by the creator of the requests library that permits you to easily render JavaScript using syntax that’s similar to the syntax in requests. It additionally consists of capabilities for parsing the information through the use of Beautiful Soup underneath the hood. By now, you’ve successfully harnessed the power and consumer-pleasant design of Python’s requests library. With just a few strains of code, you managed to scrape the static HTML content material from the web and make it out there for further processing.

Canada Vape Shop Database

— Creative Bear Tech (@CreativeBearTec) March 29, 2020

Our Canada Vape Shop Database is ideal for vape wholesalers, vape mod and vape hardware as well as e-liquid manufacturers and brands that are looking to connect with vape shops.https://t.co/0687q3JXzi pic.twitter.com/LpB0aLMTKk

This object takes as its arguments the web page.textual content doc from Requests (the content material of the server’s response) and then parses it from Python’s constructed-in html.parser. Within this file, we will begin to import the libraries we’ll be utilizing — Requests and Beautiful Soup. The Internet Archive is a non-profit digital library that provides free entry to web search engine api websites and different digital media. The Internet Archive is an effective software to keep in mind when doing any type of historic knowledge scraping, including comparing across iterations of the same website and out there knowledge. This makes it essential to know about the type of knowledge we're going to retailer domestically. First, we need to import Python libraries for scraping, here we're working with requests, and boto3 saving knowledge to S3 bucket. When you run the code for net scraping, a request is distributed to the URL that you have mentioned. As a response to the request, the server sends the data and lets you read the HTML or XML page. There are a number of different types of requests we will make using requests, of which GET is just one. In this tutorial, we’ll show you how to carry out internet scraping using Python three and the BeautifulSoup library. We’ll be scraping climate forecasts from the National Weather Service, and then analyzing them using the Pandas library. During your second attempt, you can even explore additional options of Beautiful Soup. A few XKCD pages have special content material that isn’t a simple image file. If your selector doesn’t find any parts, then soup.choose('#comic img') will return a blank record. samranga.blogspot.com/2015/08/web-scraping-beginner-python.html It uses beautiful soup python library for internet scraping with python. This tutorial went by way of using Python and Beautiful Soup to scrape information from a website. We can do this with Beautiful Soup’s .contents, which is able to return the tag’s children as a Python list data sort. The Requests library permits you to make use of HTTP inside your Python programs in a human readable means, and the Beautiful Soup module is designed to get internet scraping carried out quickly. In the case of a dynamic web site, you’ll end up with some JavaScript code, which you received’t have the ability to parse utilizing Beautiful Soup. The only approach to go from the JavaScript code to the content you’re interested in is to execute the code, similar to your browser does. The requests library can’t try this for you, but there are different solutions that can. The incredible amount of information on the Internet is a wealthy resource for any area of research or personal curiosity. To effectively harvest that information, you’ll need to turn out to be expert at web scraping. In this tutorial, you carried out internet scraping utilizing Python. You used the Beautiful Soup library to parse html knowledge and convert it into a kind that can be used for evaluation. After this tutorial, you need to have the ability to use Python to easily scrape data from the online, apply cleansing techniques and extract helpful insights from the info. import pandas as pd import numpy as np import matplotlib.pyplot as plt import seaborn as sns %matplotlib inline To perform internet scraping, you should also import the libraries proven under. The Beautiful Soup package deal is used to extract data from html recordsdata. The soup object incorporates all the info within the nested structure which might be programmatically extracted. In our example, we are scraping a webpage consisting of some quotes. So, we would like to create a program to save these quotes (and all related details about them). The Python libraries requests and Beautiful Soup are powerful instruments for the job. If you like to be taught with palms-on examples and you have a primary understanding of Python and HTML, then this tutorial is for you. Hope this submit may be useful to someone regarding this.

Python Questions

Your browser runs JavaScript and loads any content usually, and that what we'll do utilizing our second scraping library, which is called Selenium. I assume that you've got some background in Python basics, so let’s set up our first Python scraping library, which is Beautiful Soup. The last step is to retailer the extracted information in the CSV file. Here, for each card, we will extract the Hotel Name and Price and store it in a Python dictionary. The code then, parses the HTML or XML web page, finds the information and extracts it. Web scraping is an automated methodology used to extract massive amounts of data from web sites. Now that you have given the select() methodology in BeautifulSoup a brief test drive, how do you find out what to supply to select()? The quickest means is to step out of Python and into your internet browser’s developer instruments. You can use your browser to look at the doc in some detail. I normally search for id or class element attributes or another data that uniquely identifies the information I want to extract. I like to make use of Selenium and Beautiful Soup together though they overlap in performance.

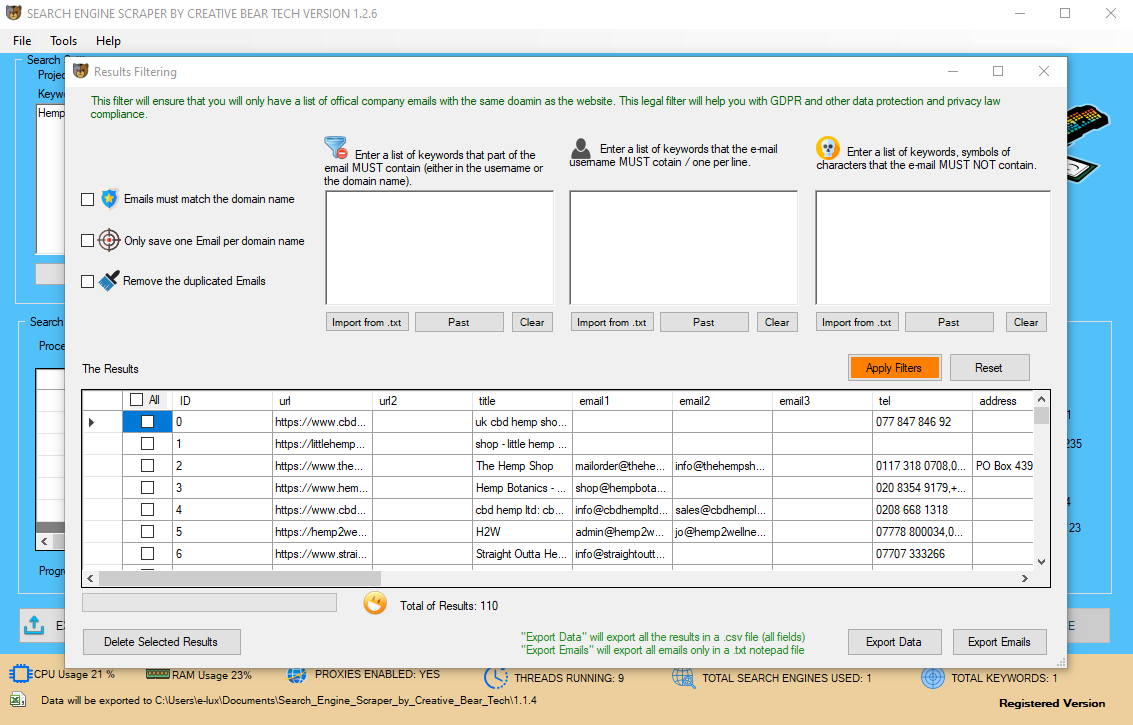

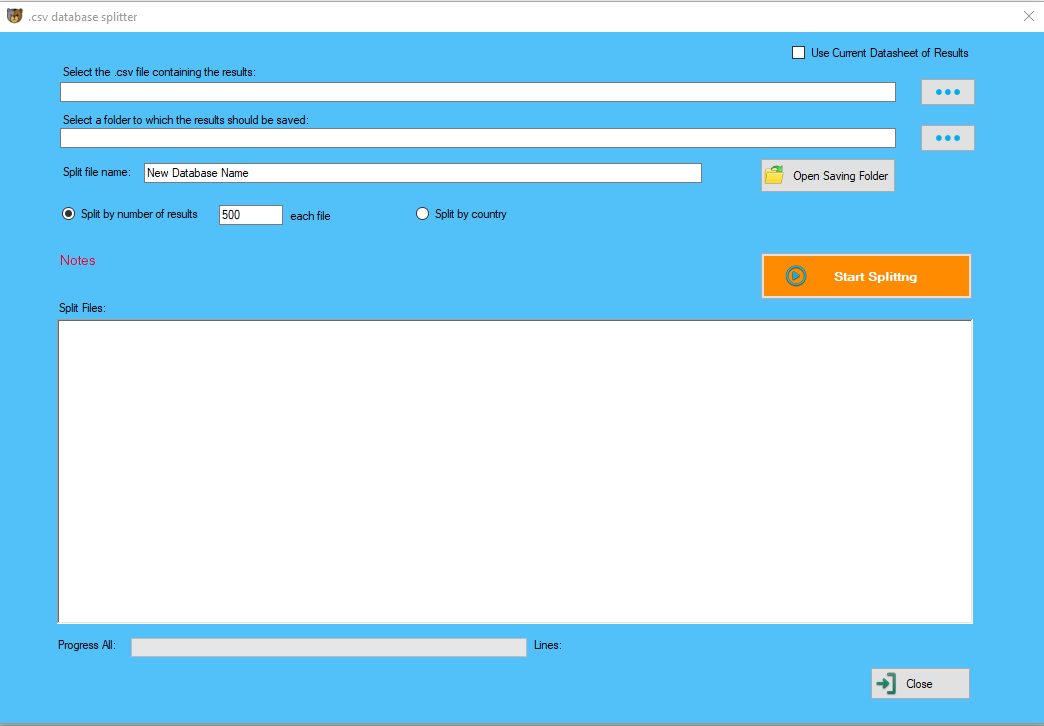

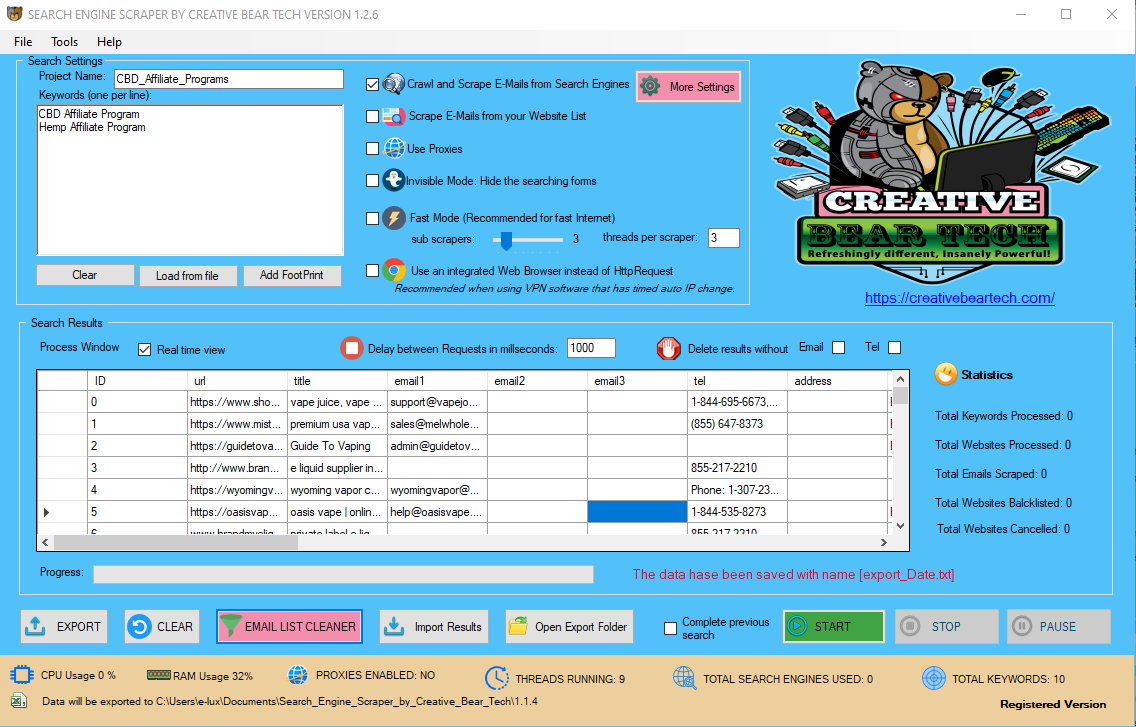

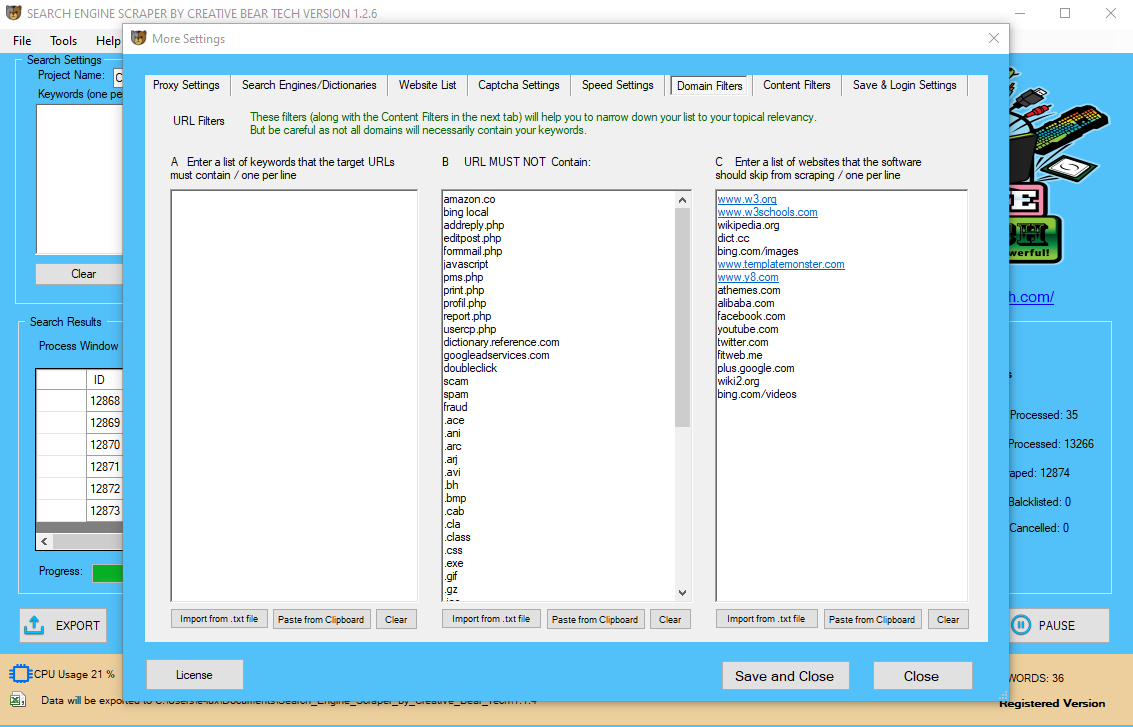

Search Engine Scraper and Email Extractor by Creative Bear Tech. Scrape Google Maps, Google, Bing, LinkedIn, Facebook, Instagram, Yelp and website lists.https://t.co/wQ3PtYVaNv pic.twitter.com/bSZzcyL7w0

— Creative Bear Tech (@CreativeBearTec) June 16, 2020

In Python, Web scraping could be done simply by using scraping tools like BeautifulSoup. But what if the consumer is concerned about efficiency of scraper or need to scrape data efficiently. We’ll now create a BeautifulSoup object, or a parse tree.

In Python, Web scraping could be done simply by using scraping tools like BeautifulSoup. But what if the consumer is concerned about efficiency of scraper or need to scrape data efficiently. We’ll now create a BeautifulSoup object, or a parse tree.

- In this tutorial, you performed web scraping using Python.

- After this tutorial, you need to be able to use Python to simply scrape information from the online, apply cleaning strategies and extract helpful insights from the data.

- You used the Beautiful Soup library to parse html data and convert it right into a type that can be used for analysis.

- The Beautiful Soup library's name is bs4 which stands for Beautiful Soup, version 4.

- The Beautiful Soup package deal is used to extract knowledge from html files.

In earlier chapters, we realized about extracting the data from net pages or internet scraping by various Python modules. In this chapter, let us look into numerous strategies to course of the info that has been scraped. It is an open source automated testing suite for web functions throughout totally different browsers and platforms. We have selenium bindings for Python, Java, C#, Ruby and JavaScript.

In earlier chapters, we realized about extracting the data from net pages or internet scraping by various Python modules. In this chapter, let us look into numerous strategies to course of the info that has been scraped. It is an open source automated testing suite for web functions throughout totally different browsers and platforms. We have selenium bindings for Python, Java, C#, Ruby and JavaScript.  Web scraping helps acquire these unstructured knowledge and retailer it in a structured form. There are different ways to scrape websites corresponding to on-line Services, APIs or writing your own code. In this text, we’ll see tips on how to implement web scraping with python. The Beautiful Soup library's name is bs4 which stands for Beautiful Soup, model 4. When you employ requests, you’ll solely receive what the server sends again. It is a Python library for pulling knowledge out of HTML and XML recordsdata. In principle, net scraping is the practice of gathering knowledge via any means apart from a program interacting with an API (or, clearly, via a human utilizing a web browser). In this tutorial, we are going to speak about Python internet scraping and tips on how to scrape internet pages utilizing multiple libraries similar to Beautiful Soup, Selenium, and some other magic instruments like PhantomJS. If there is js running, you wont have the ability to scrape utilizing requests and bs4 immediately. You might get the api link then parse the JSON to get the knowledge you need or strive selenium. It is an efficient HTTP library used for accessing internet pages.

Web scraping helps acquire these unstructured knowledge and retailer it in a structured form. There are different ways to scrape websites corresponding to on-line Services, APIs or writing your own code. In this text, we’ll see tips on how to implement web scraping with python. The Beautiful Soup library's name is bs4 which stands for Beautiful Soup, model 4. When you employ requests, you’ll solely receive what the server sends again. It is a Python library for pulling knowledge out of HTML and XML recordsdata. In principle, net scraping is the practice of gathering knowledge via any means apart from a program interacting with an API (or, clearly, via a human utilizing a web browser). In this tutorial, we are going to speak about Python internet scraping and tips on how to scrape internet pages utilizing multiple libraries similar to Beautiful Soup, Selenium, and some other magic instruments like PhantomJS. If there is js running, you wont have the ability to scrape utilizing requests and bs4 immediately. You might get the api link then parse the JSON to get the knowledge you need or strive selenium. It is an efficient HTTP library used for accessing internet pages.

With the help of Requests, we will get the raw HTML of web pages which might then be parsed for retrieving the info. Before utilizing requests, allow us to understand its installation. Out of the field, Python comes with two constructed-in modules, urllib and urllib2, designed to deal with the HTTP requests. Requestsis a python library designed to simplify the process of creating HTTP requests. Now, we would like to extract some useful data from the HTML content material. BeautifulSoup is a Python library that is used to drag information of HTML and XML recordsdata. It works with the parser to offer a natural way of navigating, looking out, and modifying the parse tree. In this tutorial, you'll learn to extract information from the web, manipulate and clean information using Python's Pandas library, and information visualize using Python's Matplotlib library.

Install Beautiful Soup

Exercising and Running Outside during Covid-19 (Coronavirus) Lockdown with CBD Oil Tinctures https://t.co/ZcOGpdHQa0 @JustCbd pic.twitter.com/emZMsrbrCk

— Creative Bear Tech (@CreativeBearTec) May 14, 2020

We are using Urllib3 on the place of requests library for getting the raw information (HTML) from web page. Then we're using BeautifulSoup for parsing that HTML information. Using the Requests library is good for the primary a part of the web scraping course of (retrieving the online web page data). Now, all we have to do is navigating and looking out the parse tree that we created, i.e. tree traversal. For this task, we shall be using another third-get together python library, Beautiful Soup.

Extracting All The Information From The Page

In basic, we may get information from a database or knowledge file and different sources. But what if we want large amount of data that is out there on-line? One approach to get such kind of data is to manually search (clicking away in a web browser) and save (copy-pasting right into a spreadsheet or file) the required knowledge. Selenium can click via webpage, submit passwords, and extract information however Beautiful Soup much simpler to make use of…together they work very nicely for a number of use circumstances. Nowadays data is everything and if somebody wants to get data from webpages then a method to make use of an API or implement Web Scraping strategies. The first step in web scraping is to navigate to the goal website and obtain the source code of the online page. A couple of other libraries to make requests and obtain the source code are http.shopper and urlib2. Use the documentation as your guidebook and inspiration. Additional apply will help you become more adept at internet scraping utilizing Python, requests, and Beautiful Soup. You’ve successfully scraped some HTML from the Internet, but if you have a look at it now, it just seems like an enormous mess. There are tons of HTML components here and there, 1000's of attributes scattered round—and wasn’t there some JavaScript mixed in as nicely? It’s time to parse this prolonged code response with Beautiful Soup to make it more accessible and select the information that you’re thinking about. The soup object contains all the info in a nested structure that might be programmatically extracted. In our example, we're scraping an online web page incorporates a headline and its corresponding website. The very first thing we’ll have Best Data Extraction Software to do to scrape an online web page is to download the web page. We can obtain pages using the Python requests library. The requests library will make a GET request to an online server, which will obtain the HTML contents of a given internet page for us. In the earlier chapter, we now have seen tips on how to deal with movies and pictures that we get hold of as part of internet scraping content material. In this chapter we're going to take care of text analysis by utilizing Python library and can learn about this in detail. The net media content material that we obtain during scraping can be photographs, audio and video information, within the form of non-net pages as well as information files. But, can we trust the downloaded knowledge particularly on the extension of knowledge we're going to obtain and retailer in our pc reminiscence?

Explode your B2B sales with our Global Vape Shop Database and Vape Store Email List. Our Global Vape Shop Database contains contact details of over 22,000 cbd and vape storeshttps://t.co/EL3bPjdO91 pic.twitter.com/JbEH006Kc1

— Creative Bear Tech (@CreativeBearTec) June 16, 2020

Many knowledge evaluation, big knowledge, and machine studying initiatives require scraping websites to assemble the information that you simply’ll be working with. The Python programming language is broadly used within the data science neighborhood, and due to this fact has an ecosystem of modules and tools that you need to use in your own tasks. In this tutorial we shall be specializing in the Beautiful Soup module. In the next instance, we're scraping the net page through the use of Urllib3 and BeautifulSoup.  While you had been inspecting the web page, you found that the hyperlink is part of the component that has the title HTML class. The current code strips away the complete link when accessing the .text attribute of its parent element. As you’ve seen before, .text only accommodates the visible text content material of an HTML component. To get the precise URL, you want to extract a kind of attributes instead of discarding it. Before beginning give one hour of time to undergo the documentation, it'll clear up most of your doubts.

While you had been inspecting the web page, you found that the hyperlink is part of the component that has the title HTML class. The current code strips away the complete link when accessing the .text attribute of its parent element. As you’ve seen before, .text only accommodates the visible text content material of an HTML component. To get the precise URL, you want to extract a kind of attributes instead of discarding it. Before beginning give one hour of time to undergo the documentation, it'll clear up most of your doubts.

Exercise at Home to Avoid the Gym During Coronavirus (COVID-19) with Extra Strength CBD Pain Cream https://t.co/QJGaOU3KYi @JustCbd pic.twitter.com/kRdhyJr2EJ

— Creative Bear Tech (@CreativeBearTec) May 14, 2020